After almost eight decades of learning new stuff whether I needed to or not, I’ve finally come across ChatGPT, the topic du jour of coffee conversations everywhere. It has taken a full week of online research just to find out, and to begin to comprehend, what ChatGPT is and what it is not.

ChatGPT is a bot, a software application programmed to do certain tasks.

Bots are automated, which means they run according to their instructions without a human user needing to manually start them up every time. Bots often imitate or replace a human user’s behaviour — and here is where it gets really interesting.

A chatbot is a computer program that simulates human conversation through voice commands or text chats or both. Chatbot is short for chatterbot, an artificial intelligence (AI) feature that can be embedded and used through major messaging applications.

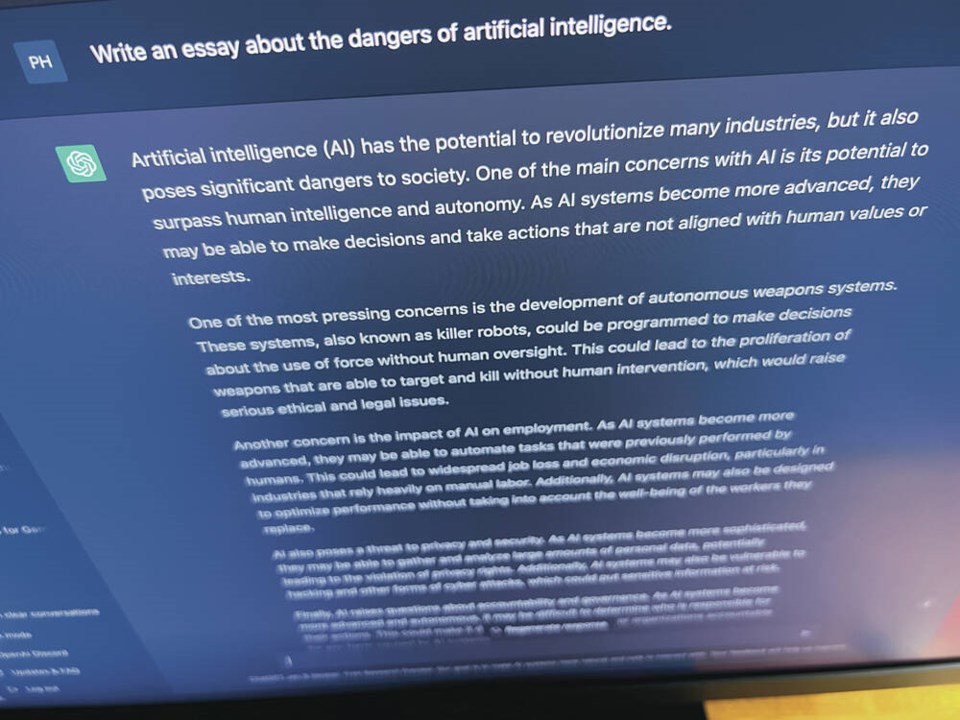

So far so good, sort of anyway, because the latest chatbot is ChatGPT, a chatbot that responds to just about any question, often in a startlingly convincing way.

And here’s where the whole thing becomes a bit scary for us previously indispensable high school teachers, or worse still, retiree wannabe column writers.

ChatGPT can respond to follow-up questions, write and debug programming code, tweet and write in the style of a particular author.

According to the hundreds of reviews of ChatGPT that have flooded the internet, ChatGPT is able to come up with information about almost anything. But it is not infallible and sometimes spits out hilarious bloopers, producing believable but wrong answers with such conviction that only an expert can spot the artifice.

There is already evidence that students at both the secondary and post-secondary levels are turning to ChatGPT to write their essays, despite experts advising against such use.

Emma Bowman, a commentator with National Public Radio in the U.S., wrote of the danger of students plagiarizing text through an AI tool that provides biased or nonsensical information, but still delivers answers with an authoritative tone: “There are still many cases where you ask it a question and it’ll give you a very impressive-sounding answer that’s just dead wrong.”

Arvind Narayanan, a computer science professor at Princeton University, summed up things in the title of his blog post: “ChatGPT is a bullshit generator. But it can still be amazingly useful.”

In The Atlantic magazine, Stephen Marche, Canadian novelist, essayist and cultural commentator, noted that “its effect on academia and especially application essays is yet to be understood.”

More pessimistic observers such as California high school teacher and author Daniel Herman claim that ChatGPT could usher in “The End of High School English.”

Some opinion pieces are even more ominous than that.

Writing for the Harvard Business Review, Ethan Mollick, an associate professor at the Wharton School of the University of Pennsylvania where he teaches innovation, sees ChatGPT as a “tipping point for artificial intelligence.”

Ben Dickson, a software engineer and the founder of the website Tech Talks, cautions against attributing human characteristics to forms of artificial intelligence, warning: “Anthropomorphizing AI is a problem that has been all too common in the history of computers and artificial intelligence,” and adding that “this kind of thinking can lead to wrong interpretations of technological achievements and unrealistic expectations of AI innovations.”

People have also been having fun with ChatGPT.

Since ChatGPT’s release, Twitter has been flooded with examples of people using it for strange and absurd purposes: writing weight-loss plans and children’s books and even, in one notable instance, offering advice on how to remove a peanut butter sandwich from a VCR in the style of the King James Bible.

There are also multiple reports of early adopters using ChatGPT for more serious purposes, such as helping to write basic consulting reports and lectures.

And in the category of what logically comes next, Edward Tian, a senior undergraduate student at Princeton University, said he has built an app named GPTZero that sniffs out whether an essay was written by a human or not, perhaps bearing in mind what science fiction writer Arthur C. Clarke wrote in 1962: “Any sufficiently advanced technology is indistinguishable from magic.”

Geoff Johnson is a former superintendent of schools.
